* * * * * * *

"Big Data's Disparate Impact" introduces the computer science literature on data mining and proceeds through the various steps of solving a problem this way:

- defining the target variable,

- labeling and collecting the training data,

- feature selection, and

- making decisions on the basis of the resulting model.

To be sure, data mining is a very useful construct. It even has the potential to be a boon to those who would not discriminate, by formalizing decision-making processes and thus limiting the influence of individual bias.

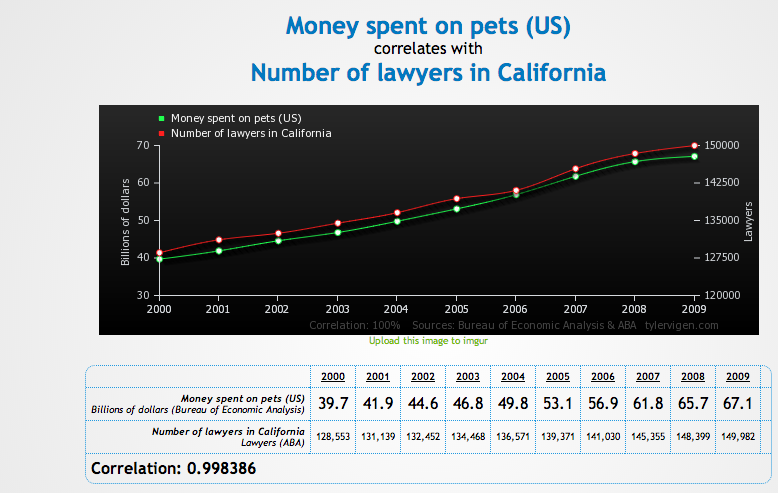

Data mining in such an instance addresses the issue of the "rogue recruiter," a recruiter who is biased, whether intentionally or not, against certain protected classes. Employers and testing companies argue that replacing the rogue recruiter with an algorithm-based decision model will eliminate that recruiter's biased hiring practices. But where data mining does perpetuate discrimination, society does not have a ready answer for what to do about it.

The simple fact that hiring decisions are made "by computers" does not mean the decisions are free of bias. Human judgment is subject to automation bias, a tendency to disregard or not search for contradictory information in light of a computer-generated solution that is accepted as correct. Such bias has been found to be most pronounced when computer technology fails to flag a problem.

The use of technology systems to hardwire workforce analytics raises a number of fundamental issues regarding the translation of legal mandates, psychological models and business practices into computer code, and the distortions that result. These translation distortions arise from the organizational and social context in which translation occurs: the choices made embody biases that exist independently of, and usually prior to, the creation of the system. They also arise from the nature of the technology itself and from the attempt to make human constructs amenable to computers. (Please see What Gets Lost? Risks of Translating Psychological Models and Legal Requirements to Computer Code.)

Defining the Target Variable and Class Labels

In contrast to those traditional forms of data analysis that simply return records or summary statistics in response to a specific query, data mining attempts to locate statistical relationships in a dataset. In particular, it automates the process of discovering useful patterns, revealing regularities upon which subsequent decision-making can rely. The accumulated set of discovered relationships is commonly called a “model,” and these models can be employed to automate the process of classifying entities or activities of interest, estimating the value of unobserved variables, or predicting future outcomes.

[B]y exposing so-called “machine learning” algorithms to examples of the cases of interest, the algorithm “learns” which related attributes or activities can serve as potential proxies for those qualities or outcomes of interest. In the machine learning and data mining literature, these states or outcomes of interest are known as “target variables.”

The proper specification of the target variable is frequently not obvious, and it is the data miner’s task to define it. In doing so, data miners must translate some amorphous problem into a question that can be expressed in more formal terms that computers can parse. In particular, data miners must determine how to solve the problem at hand by translating it into a question about the value of some target variable.

This initial step requires a data miner to “understand[] the project objectives and requirements from a business perspective [and] then convert[] this knowledge into a data mining problem definition.” Through this necessarily subjective process of translation, though, data miners may unintentionally parse the problem and define the target variable in such a way that protected classes happen to be subject to systematically less favorable determinations.

Kenexa, an employment assessment company purchased by IBM in December 2012, believes that a lengthy commute raises the risk of attrition in call-center and fast-food jobs. It asks applicants for call-center and fast-food jobs to describe their commute by picking options ranging from "less than 10 minutes" to "more than 45 minutes."

The longer the commute, the lower their recommendation score for these jobs, says Jeff Weekley, who oversees the assessments. Applicants also can be asked how long they have been at their current address and how many times they have moved. People who move more frequently "have a higher likelihood of leaving," Mr. Weekley said.

Are there any groups of people who might live farther from the work site and move more frequently than others? Yes: lower-income persons, who are disproportionately women, Black, Hispanic and mentally ill (all protected classes). They can't afford to live where the jobs are, and they move more frequently because they cannot afford their housing or have lost their jobs. Not only are these protected classes poorly paid; many are also electronically redlined from hiring consideration.

As a consequence of Kenexa's "insights," its clients will pass over qualified applicants solely because they live (or don't live) in certain areas. Not only does the employer do a disservice to itself and the applicant; it also increases the risk of employment litigation, with its consequent costs. (Please see From What Distance is Discrimination Acceptable?)

[W]here employers turn to data mining to develop ways of improving and automating their search for good employees, they face a number of crucial choices. Like [the term] creditworthiness, the definition of a good employee is not a given. “Good” must be defined in ways that correspond to measurable outcomes: relatively higher sales, shorter production time, or longer tenure, for example.

When employers use data mining to find good employees, they are, in fact, looking for employees whose observable characteristics suggest, based on the evidence that an employer has assembled, that they would meet or exceed some monthly sales threshold, that they would perform some task in less than a certain amount of time, or that they would remain in their positions for more than a set number of weeks or months. Rather than drawing categorical distinctions along these lines, data mining could also estimate or predict the specific numerical value of sales, production time, or tenure period, enabling employers to rank rather than simply sort employees.
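A minimal Python sketch can make the difference between sorting and ranking concrete. The `Employee` records, their field names, and the six-month and $10,000 thresholds are hypothetical illustrations, not any employer's actual criteria.

```python
from dataclasses import dataclass


@dataclass
class Employee:
    name: str
    monthly_sales: float  # hypothetical outcome measure
    tenure_months: float  # hypothetical outcome measure


def is_good_by_tenure(emp: Employee, min_months: float = 6.0) -> bool:
    """Categorical target: "good" means staying at least min_months."""
    return emp.tenure_months >= min_months


def is_good_by_sales(emp: Employee, min_sales: float = 10_000.0) -> bool:
    """Categorical target: "good" means meeting a monthly sales threshold."""
    return emp.monthly_sales >= min_sales


def rank_by_tenure(staff: list[Employee]) -> list[str]:
    """Numerical target: order everyone by tenure instead of splitting into two bins."""
    return [e.name for e in sorted(staff, key=lambda e: e.tenure_months, reverse=True)]


staff = [
    Employee("A", monthly_sales=12_000, tenure_months=4),
    Employee("B", monthly_sales=8_000, tenure_months=20),
    Employee("C", monthly_sales=11_000, tenure_months=9),
]

print([e.name for e in staff if is_good_by_tenure(e)])  # ['B', 'C']
print([e.name for e in staff if is_good_by_sales(e)])   # ['A', 'C']
print(rank_by_tenure(staff))                            # ['B', 'C', 'A']
```

Under these assumptions, whether the same three people count as "good" depends entirely on which measurable outcome the data miner chose to formalize. Ranking replaces the yes/no label with an ordering.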

These may seem like eminently reasonable things for employers to want to predict, but they are, by necessity, only part of an array of possible ways of defining what “good” means. An employer may attempt to define the target variable in a more holistic way, for example by relying on the grades that prior employees have received in annual reviews, which are supposed to reflect an overall assessment of performance. These target variable definitions simply inherit the formalizations involved in preexisting assessment mechanisms, which, in the case of human-graded performance reviews, may be far less consistent.

As previously noted, Kenexa defines a "good" employee in part as a function of job tenure. It then uses a number of proxies (distance from the job site, length of time at the current address, and number of moves) to predict job tenure.
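To see how such proxies operate, consider a hypothetical scoring rule in Python. Kenexa's actual model is not public. The intermediate commute buckets, the point values, and the `proxy_score` function are all invented for illustration.

```python
# Hypothetical point penalties. The first and last commute options come from
# the text above; the middle buckets and all point values are invented.
COMMUTE_PENALTY = {
    "less than 10 minutes": 0,
    "10 to 25 minutes": 5,
    "25 to 45 minutes": 10,
    "more than 45 minutes": 20,
}


def proxy_score(base: float, commute: str, years_at_address: float, moves: int) -> float:
    """Hypothetical attrition-proxy score (NOT Kenexa's actual, unpublished method).

    Nothing here measures the applicant's own future tenure; every penalty is a
    group-level correlation applied to an individual.
    """
    score = base - COMMUTE_PENALTY[commute]
    score -= 3 * moves  # invented penalty per prior move
    if years_at_address < 1:
        score -= 5  # invented penalty for a recent move
    return score


# Two applicants with identical job-related assessment results (base = 80).
near = proxy_score(80, "less than 10 minutes", years_at_address=4, moves=0)
far = proxy_score(80, "more than 45 minutes", years_at_address=0.5, moves=3)
print(near, far)  # 80 46
```

The two applicants have identical assessment results but end up 34 points apart (80 versus 46). The difference comes entirely from where they live and how often they have moved. The rule never observes how long either of them would actually stay.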

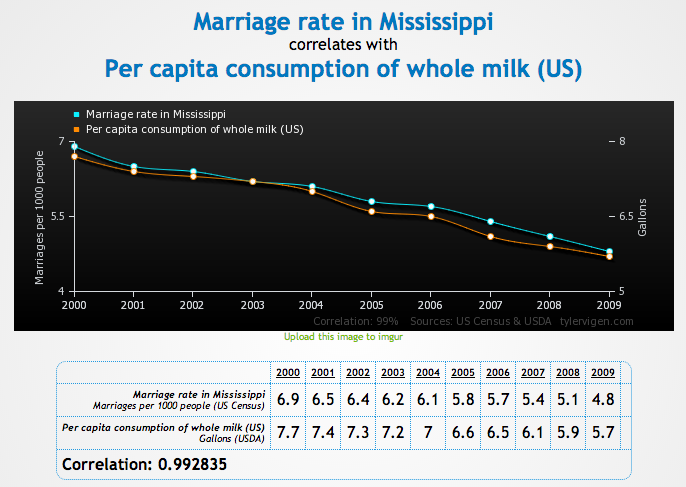

Painting with the broad brush of distance from job site, commute time and moving frequency results in well-qualified applicants being excluded, applicants who might have ended up being among the longest-tenured employees. The Kenexa findings are generalized correlations (i.e., persons living closer to the job site tend to have longer tenure than persons living farther from the job site). The insights say nothing about any particular applicant.

The general lesson to draw from this discussion is that the definition of the target variable and its associated class labels will determine what data mining happens to find. While critics of data mining have tended to focus on inaccurate classifications (false positives and false negatives), as much danger, if not more, resides in the definition of the class label itself and the subsequent labeling of examples from which rules are inferred. While different choices for the target variable and class labels can seem more or less reasonable, valid concerns with discrimination enter at this stage because the different choices may have a greater or lesser adverse impact on protected classes.
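The following Python sketch, again with hypothetical data, labels the same eight past employees under two candidate target definitions: tenure of at least 12 months, and monthly sales of at least $10,000. It then compares the share of each group labeled "good". The `impact_ratio` helper divides the lowest group rate by the highest and is one simple way to summarize the gap. The thresholds and records are assumptions.

```python
from collections import defaultdict


def positive_rates(records, is_good):
    """Share of each group that a given target definition labels "good"."""
    totals, positives = defaultdict(int), defaultdict(int)
    for r in records:
        totals[r["group"]] += 1
        positives[r["group"]] += int(is_good(r))
    return {g: positives[g] / totals[g] for g in totals}


def impact_ratio(rates):
    """Lowest group rate divided by the highest group rate (1.0 means parity)."""
    return min(rates.values()) / max(rates.values())


# Hypothetical past employees: tenure in months, monthly sales in dollars.
past = [
    {"group": "X", "tenure": 18, "sales": 9_000},
    {"group": "X", "tenure": 24, "sales": 12_000},
    {"group": "X", "tenure": 14, "sales": 11_000},
    {"group": "X", "tenure": 6, "sales": 8_000},
    {"group": "Y", "tenure": 5, "sales": 12_000},
    {"group": "Y", "tenure": 8, "sales": 11_000},
    {"group": "Y", "tenure": 20, "sales": 9_000},
    {"group": "Y", "tenure": 4, "sales": 10_000},
]

# Definition 1: "good" = stayed at least 12 months.
by_tenure = positive_rates(past, lambda r: r["tenure"] >= 12)
# Definition 2: "good" = met a monthly sales threshold.
by_sales = positive_rates(past, lambda r: r["sales"] >= 10_000)

print(by_tenure, round(impact_ratio(by_tenure), 2))
print(by_sales, round(impact_ratio(by_sales), 2))
```

Under these assumptions, the tenure-based definition labels group X "good" three times as often as group Y (0.75 versus 0.25). The sales-based definition reverses the direction of the gap (0.5 versus 0.75). Neither choice is obviously unreasonable, which is why the choice itself deserves scrutiny.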

Training Data

As described above, data mining learns by example. Accordingly, what a model learns depends on the examples to which it has been exposed. The data that function as examples are known as training data: quite literally the data that train the model to behave in a certain way. The character of the training data can have meaningful consequences for the lessons that data mining happens to learn.

Discriminatory training data leads to discriminatory models. This can mean two rather different things, though:

- If data mining treats cases in which prejudice has played some role as valid examples from which to learn a decision-making rule, that rule may simply reproduce the prejudice involved in these earlier cases; and

- If data mining draws inferences from a biased sample of the populations to which the inferences are expected to generalize, any decisions that rest on these inferences may systematically disadvantage those who are under- or over-represented in the dataset.

Labeling Examples

The unavoidably subjective labeling of examples can skew the resulting findings in such a way that any decisions taken on the basis of those findings will characterize all future cases along the same lines, even if such characterizations would seem plainly erroneous to analysts who looked more closely at the individual cases. For all their potential problems, though, the labels applied to the training data must serve as ground truth.

So long as prior decisions affected by some form of prejudice serve as examples of correctly rendered determinations, data mining will necessarily infer rules that exhibit the same prejudice.
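A deliberately simple Python sketch shows the mechanism. The `fit_majority` function is a hypothetical stand-in for a real learning algorithm: for each combination of features, it memorizes the label that past decisions assigned most often. The history is invented. The neighborhood value ("north" or "south") stands in for any feature that happens to track class membership.

```python
from collections import Counter, defaultdict


def fit_majority(examples):
    """Simplest possible learner: for each feature tuple, predict the label
    that past decisions assigned most often. Past labels are taken as ground truth."""
    votes = defaultdict(Counter)
    for features, label in examples:
        votes[features][label] += 1
    return {features: counts.most_common(1)[0][0] for features, counts in votes.items()}


# Hypothetical history: all applicants were qualified, but a prejudiced
# recruiter hired 9 of 10 from "north" and only 3 of 10 from "south".
history = (
    [((True, "north"), True)] * 9
    + [((True, "north"), False)] * 1
    + [((True, "south"), True)] * 3
    + [((True, "south"), False)] * 7
)

model = fit_majority(history)
print(model[(True, "north")], model[(True, "south")])  # True False
```

The biased past decisions are treated as ground truth. As a result, the learned rule recommends hiring qualified "north" applicants and rejecting equally qualified "south" applicants.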

An employer currently subject to an EEOC investigation states that it identified “a pool of existing employees” that Kronos, a third-party assessment provider, utilized to create a customized assessment for use by the employer. The employer's reliance on that employee sample is flawed because people with mental disabilities are severely underrepresented in the existing workforce:

- According to the 2010 Kessler Foundation/NOD Survey of Employment of Americans with Disabilities, conducted by Harris Interactive, the employment gap between people with and without disabilities has remained significant over the past 25+ years.

- According to a 2013 report of the Senate HELP Committee, Unfinished Business: Making Employment of People with Disabilities A National Priority, only 32% of working age people with disabilities participate in the labor force, as compared with 77% of working age people without disabilities. For people with mental illnesses, rates are even lower.

- The employment rate for people with serious mental illness is less than half the 33% rate for other disability groups (Anthony, Cohen, Farkas, & Gagne, 2002).

- Surveys have found that only 10% to 15% of people with serious mental illness receiving community treatment are competitively employed (Henry, 1990; Lindamer et al., 2003; Pandiani & Leno, 2011; Rosenheck et al., 2006; Salkever et al., 2007).

In Albemarle Paper Company v. Moody, 422 US 405 (1975), in which an employer implemented a test on the theory that a certain verbal intelligence was called for by the increasing sophistication of the plant's operations, the Supreme Court cited the Standards of the American Psychological Association and pointed out that a test should be validated on people as similar as possible to those to whom it will be administered. The Court further stated that differential studies should be conducted on minority groups/protected classes wherever feasible.

The use of the employer's own workforce to develop and benchmark its assessment is flawed because people with mental disabilities are severely underrepresented in the employer's workforce and the overall U.S. workforce.

Not only can data mining inherit prior prejudice through the mislabeling of examples, it can also reflect current prejudice through the ongoing behavior of users taken as inputs to data mining.

This is what Latanya Sweeney discovered in a study that found that Google queries for black-sounding names were more likely to return contextual (i.e., key-word triggered) advertisements for arrest records than those for white-sounding names.

Sweeney confirmed that the companies paying for these ads had not set out to focus on black-sounding names. Rather, the fact that black-sounding names were more likely to trigger such advertisements seemed to be an artifact of the algorithmic process that Google employs to determine which advertisements to deliver alongside the results for certain queries. The details of the process by which Google computes the so-called “quality score,” according to which it ranks advertisers’ bids, are not fully known. One important factor, however, is the predicted likelihood, based on historical trends, that users will click on an advertisement.

As Sweeney points out, the process “learns over time which ad text gets the most clicks from viewers of the ad” and promotes that advertisement in its rankings accordingly. Sweeney posits that this aspect of the process could result in the differential delivery of advertisements that reflect the kinds of prejudice held by those exposed to the advertisements. In attempting to cater to the preferences of users, Google will unintentionally reproduce the existing prejudices that inform users’ choices.
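The feedback mechanism Sweeney describes can be sketched in Python with invented click logs. The ranking formula below (bid multiplied by observed click-through rate) is a simplifying assumption. It is not Google's quality score, whose details the text notes are not fully known. The ad texts and name groups are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    bid: float  # identical for both ads: no advertiser targeted by name
    impressions: int
    clicks: int


def ad_rank(s: AdStats) -> float:
    # Simplification (an assumption): bid times observed click-through rate.
    # Google's actual quality score has other, partly undisclosed inputs.
    return s.bid * s.clicks / s.impressions


# Hypothetical click logs, keyed by (group of names searched, ad text).
log = {
    ("group_a", "Arrested?"): AdStats(bid=1.0, impressions=1000, clicks=60),
    ("group_a", "Contact info"): AdStats(bid=1.0, impressions=1000, clicks=40),
    ("group_b", "Arrested?"): AdStats(bid=1.0, impressions=1000, clicks=30),
    ("group_b", "Contact info"): AdStats(bid=1.0, impressions=1000, clicks=50),
}


def top_ad(group: str) -> str:
    """Return the ad text that ranks first for queries from this name group."""
    candidates = {ad: s for (g, ad), s in log.items() if g == group}
    return max(candidates, key=lambda ad: ad_rank(candidates[ad]))


print(top_ad("group_a"), "|", top_ad("group_b"))  # Arrested? | Contact info
```

Both advertisers bid the same amount for both name groups. The ranking diverges only because users clicked differently. This is how an advertiser-neutral system can still deliver ads that mirror users' prejudices.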

A similar situation could conceivably arise on websites that recommend potential employees to employers, as LinkedIn does through its Talent Match feature. If LinkedIn determines which candidates to recommend on the basis of the demonstrated interest of employers in certain types of candidates, Talent Match will offer recommendations that reflect whatever biases employers happen to exhibit. In particular, if LinkedIn’s algorithm observes that employers disfavor certain candidates that are members of a protected class, Talent Match may decrease the rate at which it recommends these types of candidates to employers. The recommendation engine would learn to cater to the prejudicial preferences of employers.
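The possible Talent Match dynamic can be sketched in Python as a simple feedback loop. The text does not describe LinkedIn's actual algorithm. The `update_shares` rule, the two candidate types, and the acceptance rates below are all hypothetical.

```python
def update_shares(shares: dict[str, float], accept_rate: dict[str, float]) -> dict[str, float]:
    """One feedback round: reweight each candidate type by how often employers
    accepted its recommendations, then renormalize to shares that sum to 1."""
    weights = {t: shares[t] * accept_rate[t] for t in shares}
    total = sum(weights.values())
    return {t: w / total for t, w in weights.items()}


# Hypothetical: two equally qualified candidate types, but employers accept
# type_q recommendations less often than type_p recommendations.
accept = {"type_p": 0.5, "type_q": 0.3}
shares = {"type_p": 0.5, "type_q": 0.5}
trajectory = [shares["type_q"]]
for _ in range(5):
    shares = update_shares(shares, accept)
    trajectory.append(shares["type_q"])

print([round(s, 3) for s in trajectory])  # [0.5, 0.375, 0.265, 0.178, 0.115, 0.072]
```

Both types start with equal shares and are assumed to be equally qualified. Even so, the disfavored type's share of recommendations falls every round, from 0.5 to about 0.07 after five rounds, because the system treats employer acceptances as the signal to optimize.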

Data Collection

Organizations that do not or cannot observe different populations in a consistent way and with equal coverage will amass evidence that fails to reflect the actual incidence and relative proportion of some attribute or activity in the under- or over-observed group. Consequently, decisions that depend on conclusions drawn from this data may discriminate against members of these groups.

The data might suffer from a variety of problems: the individual records that a company maintains about a person might have serious mistakes, the records of the entire protected class of which this person is a member might also have similar mistakes at a higher rate than other groups, and the entire set of records may fail to reflect members of protected classes in accurate proportion to others. In other words, the quality and representativeness of records might vary in ways that correlate with class membership (e.g., institutions might maintain systematically less accurate, precise, timely, and complete records). Even a dataset with individual records of consistently high quality can suffer from statistical biases that fail to represent different groups in accurate proportions. Much attention has focused on the harms that might befall individuals whose records in various commercial databases are error-ridden, but far less consideration has been paid to the systematic disadvantage that members of protected classes may suffer from being miscounted and the resulting biases in their representation in the evidence base.

Recent scholarship has begun to stress this point. Jonas Lerman, for example, worries about “the nonrandom, systemic omission of people who live on big data’s margins, whether due to poverty, geography, or lifestyle, and whose lives are less ‘datafied’ than the general population’s.” Kate Crawford has likewise warned, “because not all data is created or even collected equally, there are ‘signal problems’ in big-data sets—dark zones or shadows where some citizens and communities are ... underrepresented.” Errors of this sort may befall historically disadvantaged groups at higher rates because they are less involved in the formal economy and its data-generating activities.

Crawford points to Street Bump, an application for Boston residents that takes advantage of accelerometers built into smartphones to detect when drivers ride over potholes (sudden movement that suggests a broken road automatically prompts the phone to report the location to the city).

While Crawford praises the cleverness and cost-effectiveness of this passive approach to reporting road problems, she rightly warns that whatever information the city receives from this application will be biased by the uneven distribution of smartphones across populations in different parts of the city. In particular, systematic differences in smartphone ownership will very likely result in the underreporting of road problems in the poorer communities where protected groups disproportionately congregate. If the city were to rely on this data to determine where it should direct its resources, it would only further underserve these communities. Indeed, the city would discriminate against those who lack the capacity to report problems as effectively as wealthier residents with smartphones.
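The Street Bump concern reduces to simple arithmetic, sketched here in Python. The following are all hypothetical:

- the neighborhood labels;
- the smartphone rates;
- the 30 repair crews;
- the rule of allocating crews in proportion to reports.

The text does not say how Boston actually allocates repairs.

```python
def expected_reports(potholes: int, smartphone_rate: float) -> float:
    # Simplifying assumption: potholes get reported in proportion to the
    # share of drivers in that area carrying a smartphone running the app.
    return potholes * smartphone_rate


def allocate(crews: int, reports: dict[str, float]) -> dict[str, float]:
    """Split repair crews in proportion to reported (not actual) potholes."""
    total = sum(reports.values())
    return {area: crews * r / total for area, r in reports.items()}


# Hypothetical city: both areas have 100 actual potholes.
reports = {
    "wealthier area": expected_reports(100, smartphone_rate=0.8),
    "poorer area": expected_reports(100, smartphone_rate=0.4),
}
print(allocate(30, reports))
```

Both areas have the same 100 potholes. Yet the area with half the smartphone coverage receives half as many crews (10 versus 20), because the allocation sees only reports.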

A similar dynamic could easily apply in an employment context if members of protected classes are unable to report their interest in and qualification for jobs listed online as easily or effectively as others due to systematic differences in Internet access.

Zappos has launched a new careers site and removed all job postings. Instead of applying for jobs, persons interested in working at Zappos will need to enroll in a social network run by the company, called Zappos Insiders. The social network will allow them to network with current employees by digital Q&As, contests and other means in hopes that Zappos will tap them when jobs come open.

"Zappos Insiders will have unique access to content, Google Hangouts, and discussions with recruiters and hiring teams. Since the call-to-action is to become an Insider versus applying for a specific opening, we will capture more people with a variety of skill sets that we can pipeline for current or future openings," said Michael Bailen, Zappos’ head of talent acquisition.

In response to a question, “How can I stand out from the pack and stay front-and-center in the Zappos Recruiters’ minds?” on the Zappos' Insider site, the company lists six ways to stand out, including: using Twitter, Facebook, Instagram, Pinterest and Google Hangouts; participating in TweetChats; following Zappos’ employees on various social media platforms; and, reaching out to Zappos’ “team ambassadors.”

For the most part, all of the foregoing activities require broadband internet access and devices (tablets, smartphones, etc.) that run on those access networks. A number of protected classes will be challenged by both the broadband access and social media participation requirements:

- As noted in a Pew Research Internet Project report, African Americans have long been less likely than whites to have high-speed broadband access at home, and that continues to be the case. Today, African Americans trail whites by seven percentage points when it comes to overall internet use (87% of whites and 80% of blacks are internet users), and by twelve percentage points when it comes to home broadband adoption (74% of whites and 62% of blacks have some sort of broadband connection at home).

- The gap between whites and blacks when it comes to traditional measures of internet and broadband adoption is pronounced. Specifically, older African Americans, as well as those who have not attended college, are significantly less likely to go online or to have broadband service at home compared to whites with a similar demographic profile.

- According to the Pew Research Internet Project, even among those persons who have broadband access, the percentage of those using social media sites varies significantly by age.

Social media participation is not solely a function of age. "Social media is transforming how we engage with customers, employees, jobseekers and other stakeholders," said Kathy Martinez, Assistant Secretary of Labor for Disability Employment Policy. "But when social media is inaccessible to people with disabilities, it excludes a sizeable segment of our population."

Persons with disabilities (e.g., sight or hearing loss, paralysis), whether physical, mental, or developmental, face challenges accessing social media. Each of the social media platforms promoted by Zappos (Twitter, Facebook, Instagram, Pinterest, and Google Hangouts) has differing levels of support for people with disabilities (e.g., closed captions or real-time captions for content that uses sound or voice). (Please see Zappos: The Future of Hiring and Hiring Discrimination?)
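The home-broadband figures quoted above can be turned into a rough illustration of how an online-only process reshapes the pool of people who can participate. In this Python sketch, the 30/70 composition of interested, qualified applicants is a hypothetical assumption. Treating broadband access as the only barrier is also an assumption.

```python
def share_after_filter(base_share: dict[str, float], access_rate: dict[str, float]) -> dict[str, float]:
    """Composition of the pool that can take part once an access requirement is imposed.
    Assumes access is the only barrier and is unrelated to qualifications."""
    reachable = {g: base_share[g] * access_rate[g] for g in base_share}
    total = sum(reachable.values())
    return {g: r / total for g, r in reachable.items()}


# Home-broadband adoption rates as quoted from Pew; the 30/70 split of
# interested, qualified applicants is a hypothetical assumption.
pool = share_after_filter(
    base_share={"black": 0.30, "white": 0.70},
    access_rate={"black": 0.62, "white": 0.74},
)
print({g: round(s, 3) for g, s in pool.items()})
```

Under these assumptions, a group that makes up 30% of interested, qualified applicants makes up only about 26.4% of those with home broadband. That drop occurs before any social media or accessibility barriers are considered.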

To ensure that data mining reveals patterns that obtain for more than the particular sample under analysis, the sample must share the same probability distribution as the data that would be gathered from all cases across both time and population. In other words, the sample must be proportionally representative of the entire population, even though the sample, by definition, does not include every case.

If a sample includes a disproportionate representation of a particular class (more or less than its actual incidence in the overall population), the results of an analysis of that sample may skew in favor of or against the over- or under-represented class. While the representativeness of the data is often simply assumed, this assumption is rarely justified, and is “perhaps more often incorrect than correct.”
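A short Python sketch illustrates the skew. The population and sample shares and the per-group outcome rates are invented. `representation_ratio` compares each group's share of the sample with its share of the population. `overall_rate` shows how the group mix alone shifts an aggregate estimate.

```python
def representation_ratio(sample_share: dict[str, float], population_share: dict[str, float]) -> dict[str, float]:
    """Above 1.0: over-represented in the sample; below 1.0: under-represented."""
    return {g: sample_share[g] / population_share[g] for g in population_share}


def overall_rate(shares: dict[str, float], group_rate: dict[str, float]) -> float:
    """Overall outcome rate implied by a given group mix."""
    return sum(shares[g] * group_rate[g] for g in shares)


# Hypothetical numbers: groups are 50/50 in the population but 90/10 in the sample.
population = {"A": 0.5, "B": 0.5}
sample = {"A": 0.9, "B": 0.1}
rate = {"A": 0.6, "B": 0.3}  # hypothetical per-group outcome rates

print(representation_ratio(sample, population))
print(round(overall_rate(population, rate), 2), round(overall_rate(sample, rate), 2))
```

Group A is over-represented (ratio 1.8) and group B is under-represented (ratio 0.2). As a result, the sample suggests an overall rate of about 0.57 when the population rate is 0.45, even though each group's own rate is measured correctly.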

Feature Selection

Organizations—and the data miners that work for them—also make choices about what attributes they observe and what they subsequently fold into their analyses. Data miners refer to the process of settling on the specific string of input variables as “feature selection.” Members of protected classes may find that they are subject to systematically less accurate classifications or predictions because the details necessary to achieve equally accurate determinations reside at a level of granularity and coverage that the features fail to achieve.

This problem stems from the fact that data are by necessity reductive representations of an infinitely more specific real-world object or phenomenon. At issue, really, is the coarseness and comprehensiveness of the criteria that permit statistical discrimination and the uneven rates at which different groups happen to be subject to erroneous determinations. Crucially, these erroneous and potentially adverse outcomes are artifacts of statistical reasoning rather than prejudice on the part of decision-makers or bias in the composition of the dataset. As Frederick Schauer explains, decision-makers that rely on statistically sound but nonuniversal generalizations “are being simultaneously rational and unfair” because certain individuals are “actuarially saddled” by statistically sound inferences that are nevertheless inaccurate.

To take an obvious example, hiring decisions that consider credentials tend to assign enormous weight to the reputation of the college or university from which an applicant has graduated, despite the fact that such credentials may communicate very little about the applicant’s job-related skills and competencies. If equally competent members of protected classes happen to graduate from these colleges or universities at disproportionately low rates, decisions that turn on the credentials conferred by these schools, rather than some set of more specific qualities that more accurately sort individuals, will incorrectly and systematically discount these individuals.
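The credentials example can be sketched in Python with hypothetical applicants. All of them are equally competent and differ only in whether they attended a highly reputed school. The `credential_rule` stands in for any coarse feature, and the group sizes are assumptions.

```python
def false_negative_rate(applicants, group, rule):
    """Among competent applicants in a group, the share the rule wrongly rejects."""
    competent = [a for a in applicants if a["group"] == group and a["competent"]]
    missed = [a for a in competent if not rule(a)]
    return len(missed) / len(competent)


def make(group, n_elite, n_other):
    """Hypothetical competent applicants from highly reputed and other schools."""
    return ([{"group": group, "competent": True, "elite_school": True}] * n_elite
            + [{"group": group, "competent": True, "elite_school": False}] * n_other)


# Every applicant below is equally competent; only school attendance differs.
applicants = make("X", n_elite=6, n_other=4) + make("Y", n_elite=2, n_other=8)


def credential_rule(a):
    # Coarse feature: accept only graduates of the highly reputed schools.
    return a["elite_school"]


print(false_negative_rate(applicants, "X", credential_rule))  # 0.4
print(false_negative_rate(applicants, "Y", credential_rule))  # 0.8
```

The rule rejects 40% of competent group X applicants but 80% of equally competent group Y applicants. This uneven error rate is produced entirely by the coarseness of the feature.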

Kenexa, an assessment company owned by IBM and used by hundreds of employers, believes that a lengthy commute raises the risk of attrition in call-center and fast-food jobs. It asks applicants for those jobs to describe their commute by picking options ranging from "less than 10 minutes" to "more than 45 minutes." According to Kenexa’s Jeff Weekley, in a September 20, 2012 article in The Wall Street Journal, “The longer the commute, the lower their recommendation score for these jobs.” Applicants are also asked how long they have been at their current address and how many times they have moved. People who move more frequently "have a higher likelihood of leaving," Mr. Weekley said.

A 2011 study by the Center for Public Housing found that poor and near-poor families tend to move more frequently than the general population. A wide range of often complex forces appears to drive this mobility:

- the formation and dissolution of households;

- an inability to afford one’s housing costs;

- the loss of employment;

- lack of quality housing; or

- the search for a safer neighborhood.

According to the U.S. Census, lower-income persons are disproportionately female, black, Hispanic, and mentally ill.

Painting with the broad brush of distance from work, commute time and moving frequency results in well-qualified applicants being excluded from employment consideration. Importantly, the workforce insights of companies like Kenexa are based on data correlations; they say nothing about a particular person.

The application of these insights means that many low-income persons are electronically redlined. Employers do not even interview, let alone hire, qualified applicants because they live in certain areas or because they have moved. The reasons for moving do not matter, even if it was to find a better school for their children, to escape domestic violence or due to job loss from a plant shutdown.

When Clayton County, Georgia, killed its bus system in 2010, the system had nearly 9,000 daily riders, many of whom used it to commute to their jobs. The transit shutdown increased commuting times (as riders found alternate ways to get to work) and led to more housing mobility (as riders relocated closer to their jobs to reduce commuting time). Through no fault of their own, their longer commutes and recent moves made these former bus riders less attractive job candidates to the many employers that use companies like Kenexa.

Making Decisions on the Basis of the Resulting Model

Cases of decision-making that do not artificially introduce discriminatory effects into the data mining process may nevertheless result in systematically less favorable determinations for members of protected classes. Situations of this sort are possible when the criteria that are genuinely relevant in making rational and well-informed decisions also happen to serve as reliable proxies for class membership. In other words, the very same criteria that correctly sort individuals according to their predicted likelihood of excelling at a job (as formalized in some fashion) may also sort individuals according to class membership.

For example, employers may find, in conferring greater attention and opportunities to employees that they predict will prove most competent at some task, that they subject members of protected groups to consistently disadvantageous treatment because the criteria that determine the attractiveness of employees happen to be held at systematically lower rates by members of these groups. Decision-makers do not necessarily intend this disparate impact because they hold prejudicial beliefs; rather, their reasonable priorities as profit-seekers unintentionally recapitulate the inequality that happens to exist in society. Furthermore, this may occur even if proscribed criteria have been removed from the dataset, the data are free from latent prejudice or bias, the data is especially granular and diverse, and the only goal is to maximize classificatory or predictive accuracy.

The problem stems from what researchers call “redundant encodings”: cases in which membership in a protected class happens to be encoded in other data. This occurs when a particular piece of data or certain values for that piece of data are highly correlated with membership in specific protected classes. The fact that these data may hold significant statistical relevance to the decision at hand explains why data mining can result in seemingly discriminatory models even when its only objective is to ensure the greatest possible accuracy for its determinations. If there is a disparate distribution of an attribute, a more precise form of data mining will be more likely to capture it as such. Better data and more features will simply expose the exact extent of inequality.
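Redundant encoding can be checked directly. If a supposedly neutral feature lets one guess the protected attribute much better than chance, then removing the protected column does not remove the information. The Python sketch below uses invented records in which residential area correlates with group. The `proxy_accuracy` function guesses the most common group within each area.

```python
from collections import Counter, defaultdict


def proxy_accuracy(records, proxy, protected):
    """Share of records whose protected attribute is guessed correctly from the
    proxy alone, guessing the most common protected value for each proxy value."""
    by_proxy = defaultdict(Counter)
    for r in records:
        by_proxy[r[proxy]][r[protected]] += 1
    correct = sum(counts.most_common(1)[0][1] for counts in by_proxy.values())
    return correct / len(records)


# Hypothetical records: residential area is strongly correlated with group.
records = (
    [{"area": "area_1", "group": "A"}] * 9 + [{"area": "area_1", "group": "B"}] * 1
    + [{"area": "area_2", "group": "A"}] * 2 + [{"area": "area_2", "group": "B"}] * 8
)

# "Removing" the protected attribute from the features a model will see...
features_only = [{k: v for k, v in r.items() if k != "group"} for r in records]

# ...does not remove the information carried by the remaining feature.
baseline = Counter(r["group"] for r in records).most_common(1)[0][1] / len(records)
print(proxy_accuracy(records, "area", "group"), baseline)  # 0.85 0.55
```

Here area alone recovers group membership 85% of the time, compared with 55% for always guessing the majority group. A model trained on `features_only` can therefore still sort people along group lines.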

Data mining could also breathe new life into traditional forms of intentional discrimination because decision-makers with prejudicial views can mask their intentions by exploiting each of the mechanisms enumerated above. Stated simply, any form of discrimination that happens unintentionally can be orchestrated intentionally as well.

For instance, decision-makers could knowingly and purposefully bias the collection of data to ensure that mining suggests rules that are less favorable to members of protected classes. They could likewise attempt to preserve the known effects of prejudice in prior decision-making by insisting that such decisions constitute a reliable and impartial set of examples from which to induce a decision-making rule. And decision-makers could intentionally rely on features that only permit coarse-grain distinction-making—distinctions that result in avoidable and higher rates of erroneous determinations for members of a protected class.

Because data mining holds the potential to infer otherwise unseen attributes, including those traditionally deemed sensitive, it can furnish methods by which to determine indirectly individuals’ membership in protected classes and to unduly discount, penalize, or exclude such people accordingly. In other words, data mining could grant decision-makers the ability to distinguish and disadvantage members of protected classes without access to explicit information about individuals’ class membership. It could instead help to pinpoint reliable proxies for such membership and thus place institutions in the position to automatically sort individuals into their respective class without ever having to learn these facts directly.
