Clear Windshield or Rearview Mirror?

Algorithms embody a profound deference to precedent: they draw on the past to act on (and enact) the future. The apparent omniscience of big data may in truth be nothing more than misdirection. Instead of offering a clear windshield, the big data phenomenon may be more like a big rearview mirror that tells us nothing about the future.

Does this deference to precedent result in a self-reinforcing and self-perpetuating system, where individuals are burdened by a history that they are encouraged to repeat and from which they are unable to escape?

Already burdened segments of the population can be further victimized when sophisticated algorithms are used to identify, classify, segment, and target individuals as members of analytically constructed groups. In creating these groups, the algorithms rely upon correlations that lead them to treat people as members of populations, categories, or groups (e.g., persons who live more than X miles from an employer's location) rather than as individuals. Please see From What Distance is Discrimination Acceptable?

Just as neighborhoods can serve as a proxy for racial or ethnic identity, there are new worries that big data technologies could be used to “digitally redline” unwanted groups as customers, employees, tenants, or recipients of credit. A significant finding of the White House Report is that big data could enable new forms of discrimination.
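To make the proxy concern concrete, here is a minimal Python sketch built on an invented applicant pool. The data, the `Applicant` type, and the `max_miles` threshold (standing in for the "X miles" above) are all hypothetical. The screening rule never reads group membership, only commute distance. But the two made-up groups live in different places on average, so their pass rates diverge sharply anyway.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    group: str              # protected attribute; never read by the screening rule
    miles_from_site: float  # the only input the hypothetical rule uses


def distance_screen(applicant, max_miles):
    """Hypothetical screening rule that looks only at commute distance."""
    return applicant.miles_from_site <= max_miles


def selection_rates(applicants, max_miles):
    """Share of each group that passes the distance screen."""
    totals, passed = {}, {}
    for a in applicants:
        totals[a.group] = totals.get(a.group, 0) + 1
        if distance_screen(a, max_miles):
            passed[a.group] = passed.get(a.group, 0) + 1
    return {g: passed.get(g, 0) / totals[g] for g in totals}


# Made-up applicant pool: most of group B lives farther from the work site.
pool = (
    [Applicant("A", d) for d in (2, 3, 4, 5, 6, 7, 8, 9, 12, 15)]
    + [Applicant("B", d) for d in (4, 9, 11, 13, 14, 16, 18, 20, 22, 25)]
)

print(selection_rates(pool, max_miles=10))  # {'A': 0.8, 'B': 0.2}
```

Because the rule is facially neutral, the disparity only becomes visible when outcomes are broken down by group.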

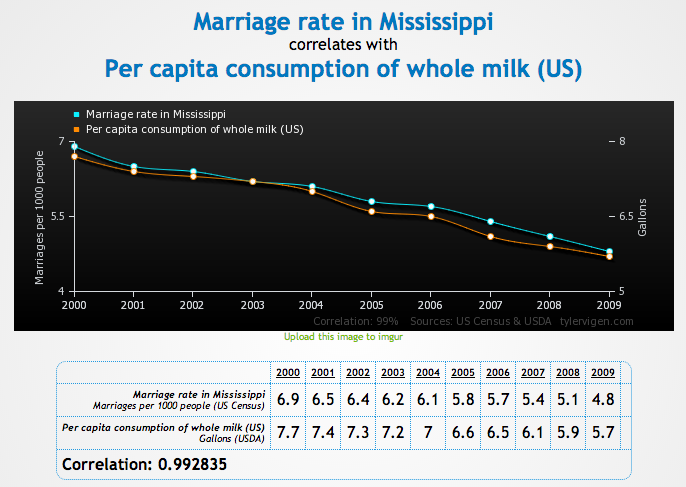

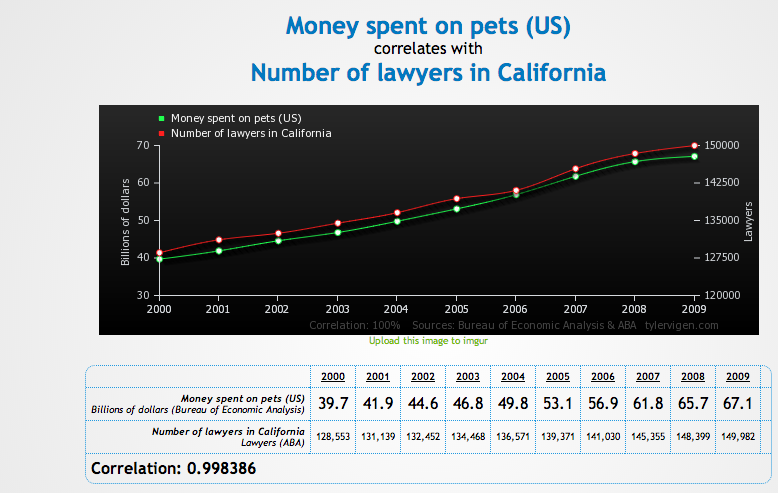

Correlation Does Not Equal Causation

Decisions made or influenced by correlation alone are inherently flawed. Correlation does not equal causation. This point is made vividly by Tyler Vigen, a law student at Harvard who, in his spare time, put together a website that finds very, very high correlations between things that are absolutely not related, such as those in the charts above.
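Here is a minimal Python sketch of the same effect using made-up data (not Vigen's series). Two hypothetical series are generated independently and share nothing but an upward trend over time, yet their Pearson correlation comes out close to 1. Correlating period-over-period changes instead of raw levels removes the shared trend. For independent series like these, that correlation typically falls toward zero. The function names, seed, and parameters are illustrative assumptions.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y)


def trending_series(periods, start, slope, noise, rng):
    """Made-up series: a linear upward trend plus independent Gaussian noise."""
    return [start + slope * t + rng.gauss(0, noise) for t in range(periods)]


def first_differences(xs):
    """Period-over-period changes, which strip out a shared linear trend."""
    return [b - a for a, b in zip(xs, xs[1:])]


rng = random.Random(42)  # fixed seed so the sketch is reproducible
periods = 30

# Two hypothetical quantities with no causal link: each is generated
# independently, and the only thing they share is that both grow over time.
series_a = trending_series(periods, start=30.0, slope=0.5, noise=0.3, rng=rng)
series_b = trending_series(periods, start=500.0, slope=25.0, noise=15.0, rng=rng)

r_levels = pearson(series_a, series_b)
r_changes = pearson(first_differences(series_a), first_differences(series_b))

print(f"correlation of raw levels:     {r_levels:.3f}")
print(f"correlation of period changes: {r_changes:.3f}")
```

Differencing is only one crude check for a shared trend. Still, it shows why a strong correlation on its own is weak evidence of any causal link.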

Shrouding Opacity in the Guise of Legitimacy

Some of the most profound challenges revealed by the White House Report concern how big data analytics may lead to disparate and inequitable treatment, particularly of disadvantaged groups, or may create such an opaque decision-making environment that individual autonomy is lost in an impenetrable set of algorithms.

Workforce analytics systems, designed in part to mitigate risks for employers, have become sources of material risk to both job applicants and employers. These systems create a perception of stability through probabilistic reasoning, and an experience of accuracy, reliability, and comprehensiveness through automation and presentation. But in so doing, they draw attention away from uncertainty and partiality. Please see Workforce Science: A Critical Look at Big Data and the Selection and Management of Employees.

Moreover, they shroud opacity—and the challenges for oversight that opacity presents—in the guise of legitimacy, providing the allure of shortcuts and safe harbors for actors both challenged by resource constraints and desperate for acceptable means to demonstrate compliance with legal mandates and market expectations.

Programming and mathematical idiom (e.g., correlations) can shield layers of embedded assumptions from the higher-level decision-makers at an employer who are charged with meaningful oversight, and can mask important concerns with a veneer of transparency.

This problem is compounded in the case of regulators outside the firm, who frequently lack the resources or vantage point to peer inside buried decision processes. In recognition of this problem, the White House Report states that "[t]he federal government must pay attention to the potential for big data technologies to facilitate discrimination inconsistent with the country’s laws and values" and, as one of its six policy recommendations, recommends:

The federal government’s lead civil rights and consumer protection agencies, including the Department of Justice, the Federal Trade Commission, the Consumer Financial Protection Bureau, and the Equal Employment Opportunity Commission, should expand their technical expertise to be able to identify practices and outcomes facilitated by big data analytics that have a discriminatory impact on protected classes, and develop a plan for investigating and resolving violations of law in such cases. In assessing the potential concerns to address, the agencies may consider the classes of data, contexts of collection, and segments of the population that warrant particular attention, including for example genomic information or information about people with disabilities.